Investigative Reporting Award

The EU Fight Against Child Pornography Stokes Fears of Widespread Online Surveillance

“The privacy advocates sound very loud. But someone must also speak for the children.” In November 2021, a few months before launching her initiative against online child pornography, Ylva Johansson was already framing the debate over what would become one of the most contentious legislative proposals Brussels had seen in years. Did the EU Commissioner for Home Affairs sense that this seemingly uncontroversial policy would provoke such a heavy backlash from civil society and across the political spectrum?

The Child Sexual Abuse Regulation (CSAR) plans to introduce a complex technical architecture called client-side scanning to combat the proliferation of child sexual abuse material (CSAM).

This approach relies on artificial intelligence systems to detect images, videos and speech depicting the sexual abuse of minors, as well as attempts to groom children. It would require all digital platforms at risk of being used for malicious purposes – from Facebook to Telegram, Signal to Snapchat or TikTok, to data clouds and online gaming websites – to use this technology to detect and report traces of CSAM on their systems, including in users’ private chats. This is impossible without weakening end-to-end encryption, currently the strongest means of securing digital communications.

‘Chat control’

Technology specialists and online rights groups were quick to warn that, behind a veil of good intentions (equipping the authorities to combat the proliferation of CSAM effectively), this “chat control” could lead to a disproportionate infringement of the fundamental rights and online privacy of all EU citizens. This has fuelled a significant opposition movement among EU legislators, both in the Parliament – where the text faces a key test in a Civil Liberties Committee vote in October – and in the Council – where discussions are stalling due to opposition from a few key Member States.

Johansson, though, has not blinked, dubbing the project her “number one priority”. The Commissioner can count on the support of numerous child protection organisations loudly urging the adoption of the text.

Far from a spontaneous movement, the campaign in favour of CSAR has been largely orchestrated and bankrolled by a network of entities with ties to the tech industry and security services, whose interests go far beyond child protection. Amidst the Commission’s efforts to keep a tight lid on details of the campaign, our investigation – based on dozens of interviews, leaks of internal records and documents obtained through freedom of information laws – has uncovered a coordinated communication effort between Johansson’s cabinet and these lobby groups. Johansson has not responded to several requests for an interview.

One of the first hints at this behind-closed-doors pact lies in a letter sent by Johansson in early May 2022, a few days before launching her legislative proposal on CSAM. “We have shared many moments on the journey to this proposal. We have had extensive consultations. You have helped provide evidence that has served as the basis to draft this proposal”, wrote the Commissioner.

The previously undisclosed letter was not sent to a classic child protection organisation but to the executive director of Thorn. Despite its non-profit status, this American organisation also has a for-profit operation, selling artificial intelligence (AI) technologies which can identify child sexual abuse images online.

Thorn’s tools, such as Safer, are already used by companies such as Vimeo, Flickr and OpenAI – the creator of ChatGPT. Its clients also include law enforcement agencies across the globe, like the US Department of Homeland Security, which has spent $4.3 million on its software licences since 2018. Should “client-side scanning” for CSAM be imposed on all major platforms in the EU, Thorn could come out as one of the main beneficiaries of the new regulation – along with other tech companies it has already partnered with, such as Amazon or Microsoft.

Hollywood celebrity lobbyist

Thorn had been pushing for this regulation long before it was put down on paper. The company has hired high-profile lobbyists, including FGS Global, a major lobbying firm with considerable access to Brussels powerbrokers, to which it paid more than €600,000 in representation costs for 2022 alone.

But Thorn has also relied on its own resources. Its board was long chaired by Hollywood star Ashton Kutcher, who co-founded the company with Demi Moore in 2012, and whose multiple investments in AI companies could stand to benefit from the development of a market for CSAM surveillance. The actor was forced to step down from his position at Thorn on September 14th, amid indignation over his support for a fellow actor accused – and now convicted – of raping two women. For years, though, access to the top echelons in Brussels was never an issue for either Kutcher or Thorn, thanks to the actor’s star power and tear-jerking speeches.

Kutcher and Johansson were the key speakers at a summit organised and moderated by an enthusiastic Eva Kaili, then Vice President of the European Parliament, in November 2022, shortly before she was removed from her position in December following corruption charges against her. The actor also addressed lawmakers in Brussels in March 2023, attempting to allay concerns about the possible misuse and failures of existing technology – his speech was “highly misleading in terms of what the technology can do”, according to an internal document from European Digital Rights, an association of civil and human rights organisations.

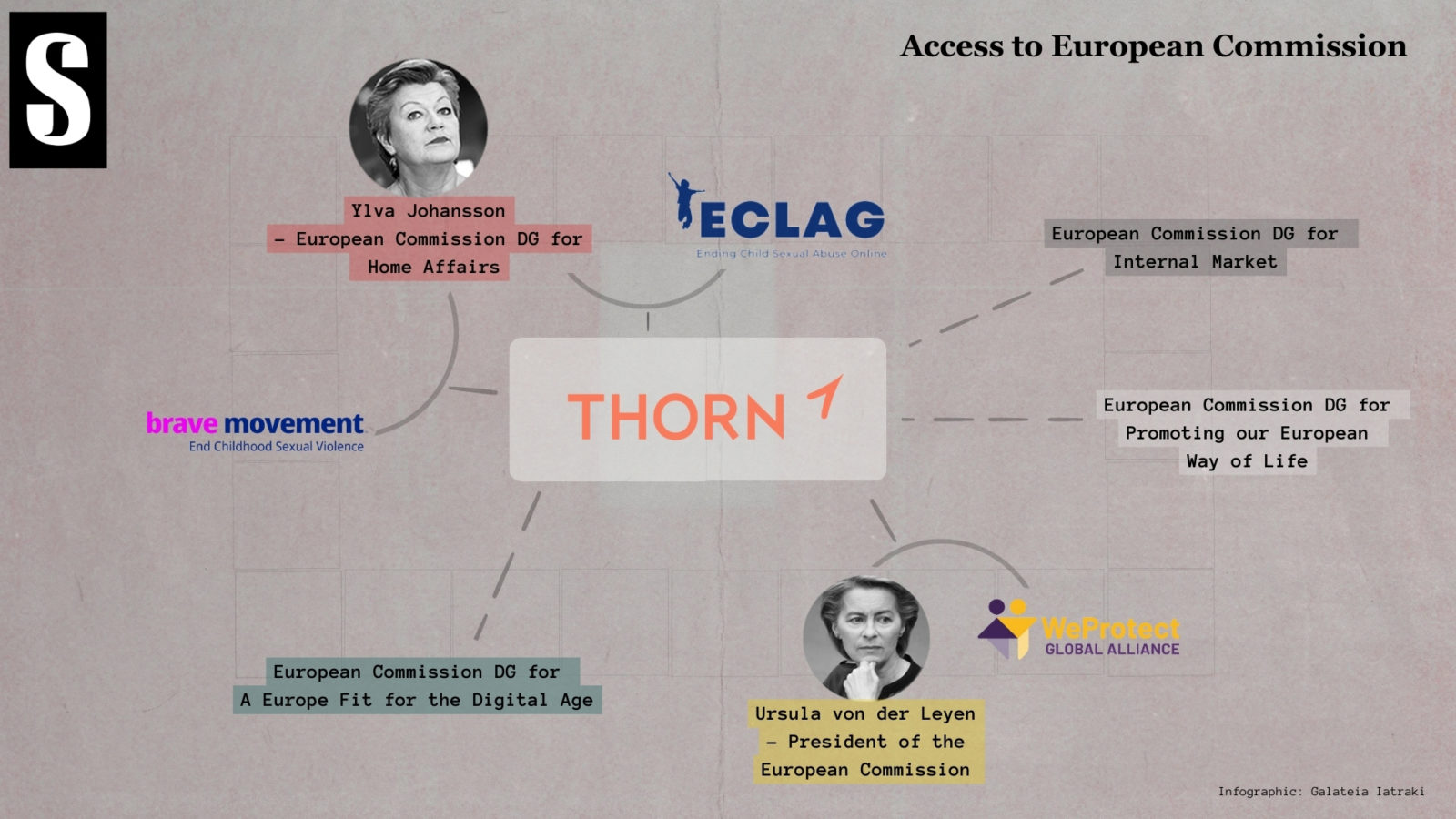

Throughout the preparation process, Thorn was also granted close access to EU decision-makers behind closed doors. The organisation, listed as a charity in EU transparency registers, met with cabinet members of every top Commission official with a say on security or digital policy, including Margrethe Vestager, Margaritis Schinas and Thierry Breton. Ursula von der Leyen, the President of the European Commission, was among the first to be briefed on the plans for fighting child sexual abuse, in a video conference attended by Ashton Kutcher as early as November 2020.

Illustration: Galateia Iatraki / Solomon

A generous offer

Thorn’s main point of contact was, however, Johansson’s team. Emails released by the Commission after a long struggle reveal a close and continuous working relationship between the two parties in the months following the rollout of the CSAR proposal, with the Commission repeatedly facilitating Thorn’s access to crucial decision-making venues attended by member states’ ministers and representatives.

A few days after the presentation of Johansson’s proposal, Thorn representatives sat down with one of the Commissioner’s cabinet members and expressed a “willingness to collaborate closely”. Not only did they offer help to prepare “communication material on online child sexual abuse”, but they also offered to “provide expertise” for “the creation of the database of indicators” to be hosted by the future EU Centre to Prevent and Combat Child Sexual Abuse.

Thorn is among the most vocal stakeholders advocating for the creation of this new body, which would vet and approve scanning technologies, then purchase them and offer them to small and medium-sized companies. Thorn’s offer to help build its database reads as generous pro bono work, and it would be – if major commercial interests were not at stake.

Abdication of corporate responsibility and ‘false positives’

“What I don’t think governments understand is just how expensive and fallible these systems are”, warned Meredith Whittaker, president of the Signal Technology Foundation, the US non-profit behind the encrypted messaging application Signal, which is designed to prevent anyone from collecting or reviewing user data – and which therefore has a clear stake in keeping encryption strong. “We’re looking at [a cost of] hundreds of millions of dollars indefinitely due to the scale that this is being proposed at”, added Whittaker, who compared this policy to handing tech companies a “get out of responsibility free card”, in effect saying: “you pay us and we will do whatever it is to magically clean up this problem”.

This scepticism is shared by a growing number of stakeholders. “Current technologies lead to a high number of false positives”, said a spokesperson for the Dutch government, suggesting that many users might be needlessly reported to the authorities, overburdening law enforcement agencies. In a severe blow to the advocates of this solution, Apple announced in summer 2023 that it was impossible to implement CSAM scanning while preserving the privacy and security of digital communications. A few weeks later, UK officials privately admitted that no existing technology could scan end-to-end encrypted messages without undermining user privacy.

Matthew Daniel Green, a security technologist at Johns Hopkins University, also warned that AI-driven scanning technology could expose digital platforms to malicious attacks: “If you touch upon built-in encryption models, then you introduce vulnerabilities. This could endanger the kids themselves, by opening the way for predators to hack accounts in searching for images.”

Suspicions of intertwined interests

While Thorn played a central role in framing the Commission’s proposal, a tight and intricate network of players has stepped into Brussels’ offices to build consensus around it. Among them is a discreet but highly influential organisation called WeProtect Global Alliance. Registered as a “foundation” at an unassuming residential address in the tiny Dutch town of Lisse, the Alliance aims to “develop policies and solutions to protect children from sexual exploitation and abuse online”. However, it is no ordinary civil society group.

Launched as a government initiative by EU, UK and US authorities, WeProtect was transformed into a supposedly independent organisation in spring 2020, just as efforts to push for legislative initiatives to tackle CSAM with client-side scanning technology were picking up. Its membership includes not only NGOs, such as Thorn, but also tech companies such as Snap and Palantir, and dozens of governments.

The EU Commission has endorsed WeProtect as “the central organisation for coordinating and streamlining global efforts and regulatory improvements” in the fight against online child sexual abuse. Johansson’s Directorate-General for Migration and Home Affairs (DG Home) granted the organisation almost €1 million to organise a summit in Brussels dedicated to the fight against CSAM, as well as activities to enhance law enforcement collaboration.

One of DG Home’s most senior officials, Antonio Labrador Jimenez, who has played a central role in drafting and promoting the CSAR proposal, has officially been part of WeProtect’s board since July 2020, though documents suggest that his involvement dates back as far as 2019. Although Mr. Labrador Jimenez “does not receive any kind of compensation”, the fact that he holds this position raises serious questions about how the Commission uses WeProtect to promote Johansson’s proposal. When Labrador Jimenez briefed his fellow board members about the proposed regulation in July 2022, notes from the meeting show that “the Board discussed the media strategy of the legislation”.

WeProtect has not answered questions about its funding arrangements with the Commission, or about the extent to which its advocacy strategies are shaped by stakeholders sitting on its policy board. This question is all the more important as WeProtect’s board also includes representatives of powerful security agencies, such as Stephen Kavanagh, the Executive Director for Police Services of INTERPOL, or Lt. Colonel Dana Humaid Al Marzouqi, a high-ranking United Arab Emirates Interior Ministry official who chairs or participates in numerous international police task forces.

Trojan horse hypothesis

Ross Anderson, professor of Security Engineering at Cambridge University, says the missing piece in the current debate is precisely the role of law enforcement agencies, which he suspects hope to undermine encryption by letting child protection organisations lead the charge in Brussels.

“The security and intelligence community have always used issues that scare lawmakers, like children and terrorism, to undermine online privacy.” They have, Anderson said, “told EU policymakers that once the machinery has been built to target CSAM, it is entirely a policy decision to target terrorism too. We all know how this works, and come the next terrorist attack, no lawmaker will oppose the extension of scanning from child abuse to serious violent and political crimes.”

This concern is shared by Wojciech Wiewiórowski, the EU’s Data Protection Supervisor, who told Le Monde and its partners that the proposal would amount to “crossing the Rubicon” towards the mass surveillance of EU citizens if this technology were to be used in wider contexts.

These warnings are not mere speculation. In July 2022, the head of DG Home met Europol’s Executive Director, Catherine De Bolle, to discuss Johansson’s proposal and the EU law enforcement agency’s contribution to the fight against CSAM. According to the minutes of the meeting, Europol floated the idea of using the proposed EU Centre to scan for more than just CSAM. “There are other crime areas that would benefit from detection,” Europol told the Commission official, who “signalled understanding for the additional wishes” but “flagged the need to be realistic in terms of what could be expected, given the many sensitivities around the proposal.”

Research by Imperial College academics Ana-Maria Cretu and Shubham Jain showed how client-side scanning systems could be discreetly tweaked to perform facial recognition from the devices of unaware users, cautioning that there might be more vulnerabilities that we don’t know of yet. “Once this technology is rolled out to billions of devices across the world, you can’t take it back”, they warned.

Intense lobbying by ‘survivors’

When the proposed regulation came under attack from privacy experts, a little-known group came to the rescue. The Brave Movement, “a survivor-centred global movement campaigning to end childhood sexual violence”, emerged only a few weeks before the text was rolled out, and soon became a key ally for Johansson.

In April 2022, the organisation hosted the Commissioner at an online “Global Survivors Summit”. A year later, she joined a photo-op in front of the European Parliament during the “Brave Movement Action Day”, along with a group of survivors gathered to “demand EU leaders be brave and act to protect millions of children at risk from the violence and trauma they faced”. During that period, Brave recruited its new Europe Campaign Manager, Jessica Airey, from Johansson’s department, where she had spent five months as an intern promoting the CSAR proposal.

An internal strategy note obtained during the course of this investigation leaves no doubt about the Brave Movement’s central role as an advocacy instrument for building consensus around Johansson’s proposal. “The main objective of the (…) mobilisation around this proposed legislation is to see it passed” and to “create a positive precedent for other countries which we will invite to follow through with similar legislation”, wrote the organisation.

This November 2022 note suggests that Brave has played a key role in lobbying officials both in Brussels and in EU member states. The organisation has, for instance, built a strong relationship with Conservative MEP Javier Zarzalejos, a supporter of Johansson’s proposal who leads the negotiations in the Parliament. The document reveals that Zarzalejos “asked for strong survivors’ mobilisation in key countries like Germany”, one of the “potential blockers in the negotiations”. Brave has also met with the French Junior Minister for Child Protection, Charlotte Caubel, in an effort to turn France – already in favour of the text – into “a Champion for the legislation.”

A generous American sponsor

Obviously, preventing the circulation of CSAM is a common goal for all child protection organisations. Not all, however, approve of the approach proposed by Johansson. For instance, the German Child Protection Association argues that “relying on purely technical solutions to protect children from sexualized violence online is a fatal mistake with devastating consequences for the fundamental democratic rights of all people”. Scanning private communications would “deeply interfere with the fundamental rights of children and young people”, added the association.

Dissenting voices like this one have struggled to make themselves heard in the face of the powerful coalition of pro-CSAR organisations grouped together under the banner of the European Child Sexual Abuse Legislation Advocacy Group (ECLAG). This coordination platform, launched immediately after Johansson’s proposal, brings together renowned children’s rights organisations (Terre des hommes, Missing Children) with Thorn, the Brave Movement and the Internet Watch Foundation (a UK non-profit which produces technology to identify CSAM and is funded by some of the biggest players in the internet industry).

In addition to close access to Johansson’s team, ECLAG members have received financial support from an American charity called the Oak Foundation. Headed by an ex-US State Department official, the foundation has granted them at least €24 million since 2019. In addition to supporting Thorn ($5.3 million), the foundation donated $10.3 million for the creation of the Brave Movement, and $1.5 million to Purpose Europe, a UK-based consultancy controlled by French giant Capgemini, which has worked with multiple ECLAG organisations and held meetings with Johansson’s cabinet.

A spokesperson for the Oak Foundation, which has a long-standing commitment to helping NGOs that fight child abuse around the world, said that the foundation supports organisations that “advocate for new policies, with a specific focus in the EU, US, and UK, where opportunities exist to establish a precedent for other governments”. However, the spokesperson did not address the privacy concerns raised by the CSAR proposal.

Struggling for transparency

Throughout this investigation, our reporters have found it very difficult to obtain internal European Commission documents related to the proposed CSAR regulation, in spite of the EU’s freedom of information law. While it is not uncommon for the Commission to miss statutory deadlines on document access requests, requests filed as early as December 2022 went unanswered for months.

It ended up being necessary to ask the European Ombudsman to intervene in order to get a first series of documents released. But several requests about email exchanges and the minutes of meetings between senior Commission officials from Ylva Johansson’s cabinet and private lobby groups like Thorn and WeProtect were rejected. For example, the Commission refused to disclose Thorn executive director Julie Cordua’s reply to Johansson’s message of May 2022, as well as a “policy one pager” Thorn had shared, after Thorn, despite its charity status, argued that “the disclosure of the information contained therein would undermine the organization’s commercial interest”.

The European Ombudsman is currently investigating four separate complaints filed by the reporting team regarding the Commission’s failure to fulfil its transparency obligations. The Commission has so far failed to respond to the Ombudsman’s request for information on two of the complaints, which were submitted in July.

‘A commercial interest’

Ylva Johansson’s close dialogue with this network of organisations and tech companies is all the more remarkable given her silence towards other stakeholders. European Digital Rights has repeatedly complained about a lack of consideration from Johansson, who has never met with the group. Offlimits shares the same frustration. Housed in a bright office at the edge of Amsterdam’s famous red-light district, this organisation is Europe’s oldest hotline for children and adults wanting to report abuse, whether it happens behind closed doors or is seen in a video circulating online. Every day, its seven analysts review thousands of reports and images to assess whether they are abusive or illegal.

While Offlimits’ legitimacy is unquestioned, Arda Gerkens, its former director (2015 to September 2023), has faced a wall of silence since Ylva Johansson launched her proposal. “In the past years, Commissioner Johansson and her staff visited Silicon Valley and big North American groups”, explained Gerkens. “I invited her here, but she never came.”

“Who will benefit from the legislation?”, Gerkens asked rhetorically. “Not the children”.

Instead, she believes that Johansson’s proposal is excessively “influenced by companies pretending to be NGOs, but acting more like tech companies”. “Groups like Thorn”, she added, “use everything they can to put this legislation forward, not just because they feel that this is the way forward to combat sexual child abuse, but also because they have a commercial interest in doing so.”

Credits:

Maxime Vaudano – Investigations editor

Dusica Tomovic – Managing Editor

Ivana Nikolic – Program Manager

Matt Robinson – Copy Editor

Ivana Jeremic – Fact Checker

Igor Vujcic – Illustrator

Ilianna Papangeli – Managing Director

Stavros Malichudis – Chief Editor

Galatia Iatraki – Illustrator

Kai Bierman – Investigations editor

Andrés Gil – Foreign desk editor

Mariangela Paone – Special envoy

Evert De Vos – Editor

Xandra Schutte – Director

Olivia Ettema – Illustration

Lorenzo Bagnoli – Editor-in-chief

Antonella Napolitano – Impact editor

Francesca De Benedetti – Foreign news editor

Andre Meister – Editor

Funding partner:

European Journalism Center

Zlatina Siderova – Project leader

Juliette Gerbais – Project assistant